User researchers on government services typically have an enormous pool of people to draw upon. At HMRC or DVLA, for example, a service might reach almost everyone in the UK over the age of 18. Designing for such a wide audience means considering many diverse needs, but actually finding people to conduct research with is rarely difficult.

Although it’s a public product, the primary audience for the Performance Platform is service managers, Ministers and other government officials interested in improving and comparing services. Here we have the opposite situation to the norm – a very small user group, although one with fairly similar needs. Add to that the fact that these are very busy people who are often not based in London, and you have quite a challenge.

To overcome it, we had to be smart with our time and in the way we gathered feedback.

Testing on the road

We started by making contact with licensing managers in local government. Several licensing dashboards are already available on the platform, so the platform is already relevant to that group. These managers also share characteristics and motivations with officials in central government.

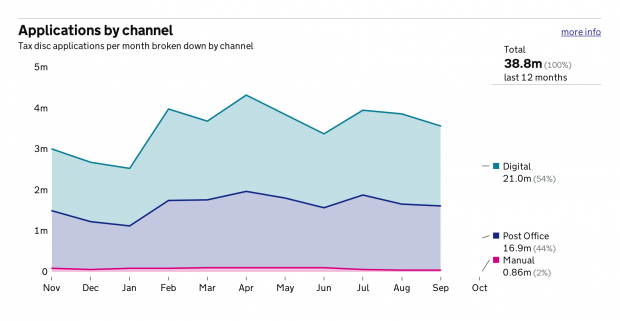

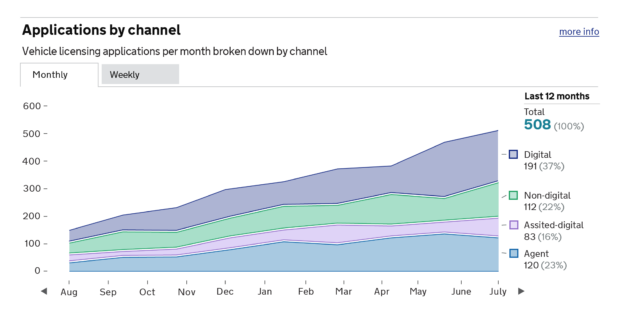

We spent our summer months travelling around the country, meeting interesting and dedicated licensing officers. This first pass of research uncovered that the stacked graph was being misread, the drop-off module was confusing, and our labelling of many graphs was much too technical.

Going guerrilla

GDS shares offices with the Food Standards Agency, Medical Research Council and OFSTED, which gives us plenty of intelligent, numerate people to talk to and test things with.

We further developed our designs by grabbing these innocent civil servants in the office canteen as they were waiting for their food. The team showed them different versions of graphs and charts, and asked questions to see whether what we were showing made sense and whether it could be easily read and understood. This kind of research is called guerrilla testing, and it’s a fast, low-cost way to get lots of valuable feedback.

Remote control

To supplement our work on the ground, we also tried remote testing with service managers. If anyone reading this is looking for a new product idea, then here’s a good one – a great tool for conducting remote user testing. We tried some very good screen-sharing products, but it was difficult for our participants to interact remotely with our prototype without downloading software – something they often aren’t able to do. We are still looking for better solutions to this problem than jumping on trains, so bright ideas are very welcome.

Next steps

As well as providing us with plenty of stories we can take on to improve the Performance Platform itself, the conversations we’ve had with users have revealed gaps in our understanding of what it’s like to work with GDS. What is it like to be a client of GDS in general, and of the Performance Platform specifically? How can we make this a better experience? What does the Performance Platform mean to departments that are policy-focused rather than transaction-focused? How can we make the Government Service Design Manual more relevant to departments?

Finding answers to these questions is going to be the focus of our next phase of research. We are going to build a small team focused on the internal audience of GDS in Whitehall, to help set the direction of our work and future engagement with colleagues elsewhere in government.

Meanwhile, the campaign to find service manager research participants continues. If you would like to be involved, we’d love to hear from you. We pay in chocolate biscuits and a large amount of gratitude.