In December, the GOV.UK Accessibility Team published a blog post on how they improved accessibility on GOV.UK in preparation for the accessibility regulations deadline.

Data specialists in GOV.UK supported this work by creating an accessibility reporting tool to identify accessibility issues among the half a million pages published on GOV.UK. We then helped to fix some of the problems using data processing techniques.

Here’s how our work helped make GOV.UK more accessible.

Creating our accessibility reporting tool

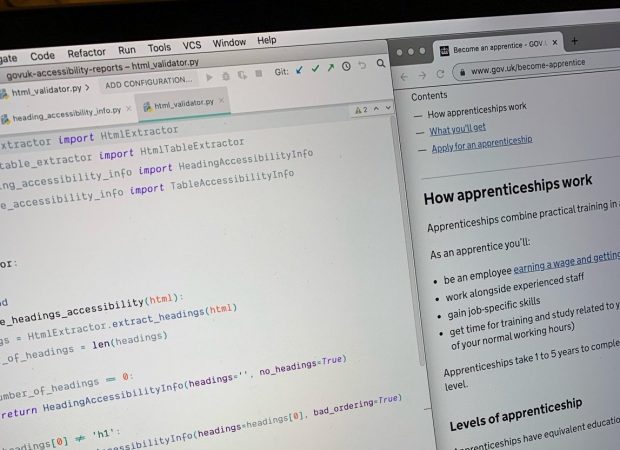

We created a tool that could analyse GOV.UK’s content to look for specific accessibility content issues. We wrote it in Python, as it’s a GDS-supported programming language, and structured the code in a way that allowed us to write and “plug in” different report generators.
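
To illustrate what “plugging in” report generators could look like, here is a minimal sketch. It is not the team’s actual code: the registry `REPORTS`, the `report` decorator, the page dictionary keys (`url`, `html`, `attachments`) and both example checks are assumptions made for this example.

```python
import re
from typing import Callable, Dict, Iterable, Iterator, Optional, Tuple

# A report generator takes one page and returns a fail reason,
# or None if the page passes that check.
ReportGenerator = Callable[[dict], Optional[str]]

REPORTS: Dict[str, ReportGenerator] = {}  # hypothetical registry of plug-ins


def report(name: str) -> Callable[[ReportGenerator], ReportGenerator]:
    """Decorator that plugs a generator into the registry under a report name."""
    def register(func: ReportGenerator) -> ReportGenerator:
        REPORTS[name] = func
        return func
    return register


# Assumed heuristic: a paragraph whose only content is bold text may be
# standing in for a real heading.
BOLD_ONLY_PARAGRAPH = re.compile(
    r"<p>\s*<(strong|b)>[^<]+</\1>\s*</p>", re.IGNORECASE
)


@report("non_semantic_headers")
def non_semantic_headers(page: dict) -> Optional[str]:
    if BOLD_ONLY_PARAGRAPH.search(page.get("html", "")):
        return "paragraph made up only of bold text"
    return None


@report("non_html_attachments")
def non_html_attachments(page: dict) -> Optional[str]:
    bad = [
        name for name in page.get("attachments", [])
        if not name.lower().endswith((".html", ".htm"))
    ]
    if bad:
        return "non-HTML attachments: " + ", ".join(bad)
    return None


def run_reports(pages: Iterable[dict]) -> Iterator[Tuple[str, str, str]]:
    """Yield (report name, page URL, fail reason) for every failed check."""
    for page in pages:
        for name, generator in REPORTS.items():
            reason = generator(page)
            if reason is not None:
                yield name, page["url"], reason
```

With this shape, adding a new report means writing one more decorated function; the code that loops over pages does not need to change.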

This meant our tool could look at the half a million pages on GOV.UK and filter them based on a checklist of Web Content Accessibility Guidelines (WCAG) fails. We chose which fails to look for based on the Accessibility Team’s manual review of more than 60 representative pages of GOV.UK (pages that reflect most of the site).

We created 8 different reports that identified pages which had:

- non-semantic headers - when text was incorrectly formatted as a header through bold font and mimicking the style of a heading, instead of being marked as a header in the code for machines such as screen readers to identify

- badly ordered headers - when the hierarchical structure of the page was broken by large gaps between heading levels; for example, a heading 1 followed by a heading 5

- duplicate titles - when a page title was the same as another, which indicated it was not unique and did not appropriately describe the purpose of the page

- non-compliant links - when there were multiple instances of the same piece of text that linked to different URLs; for example, link text for ‘starting a business’ in the first paragraph linked to ‘applying for a start-up loan’, whereas in the second paragraph ‘starting a business’ linked to ‘set up a business’

- non-English text that was labelled English

- non-English attachments that were labelled English

- non-HTML attachments

- badly structured tables - tables should always have column headers and sometimes row headers to explain the content, allowing for people who use screen readers to understand the table's structure
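
Two of the checks in this list, badly ordered headers and non-compliant links, can be sketched with Python’s standard `html.parser`. This is an illustration only. The class and function names are hypothetical, and the rule that any skipped heading level counts as a fail is our assumption for the example; the real tool may have used a different threshold.

```python
from collections import defaultdict
from html.parser import HTMLParser


class PageScanner(HTMLParser):
    """Collect heading levels and the URLs each piece of link text points to."""

    def __init__(self):
        super().__init__()
        self.heading_levels = []              # for example [1, 2, 3]
        self.link_targets = defaultdict(set)  # link text -> set of hrefs
        self._href = None                     # href of the <a> being read
        self._text = []                       # text fragments inside that <a>

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.heading_levels.append(int(tag[1]))
        elif tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Normalise whitespace and case so "Starting a business" and
            # "starting a business" are treated as the same link text.
            text = " ".join("".join(self._text).split()).lower()
            if text:
                self.link_targets[text].add(self._href)
            self._href = None


def heading_jumps(levels):
    """Return (previous, current) pairs where a heading skips a level."""
    return [(prev, cur) for prev, cur in zip(levels, levels[1:]) if cur - prev > 1]


def ambiguous_links(link_targets):
    """Return link texts that point to more than one URL."""
    return {
        text: sorted(hrefs)
        for text, hrefs in link_targets.items()
        if len(hrefs) > 1
    }


def scan(html):
    scanner = PageScanner()
    scanner.feed(html)
    scanner.close()
    return heading_jumps(scanner.heading_levels), ambiguous_links(scanner.link_targets)
```

Calling `scan()` on a page’s HTML returns the heading jumps and the ambiguous link texts, which a report generator could turn into fail reasons.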

We did some additional processing for the last report, on table structures. Since 2019, we’ve set up Govspeak (our version of Markdown, which is used to format content in Whitehall publisher) so that this structure can be followed. However, some tables had been added to pages before 2019 and were not correctly marked up. The analyser we built uses CSS selectors to find tables with (or without) specific HTML structures. Because it uses CSS selectors, these queries could be reused elsewhere to perform the same extraction.
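
As a rough illustration of the table check, the sketch below finds tables that contain no header cells. Note that it swaps in the standard library’s `html.parser` to stay dependency-free, rather than the CSS selectors the analyser uses. In a library that supports the `:has()` pseudo-class, a similar query could be written roughly as `table:not(:has(th))`. The names here are hypothetical.

```python
from html.parser import HTMLParser


class TableHeaderScanner(HTMLParser):
    """Record, for each <table>, whether it contains at least one <th> cell."""

    def __init__(self):
        super().__init__()
        self.tables = []  # one bool per table, in document order
        self._open = []   # stack of indexes into self.tables (handles nesting)

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append(False)
            self._open.append(len(self.tables) - 1)
        elif tag == "th" and self._open:
            # Credit the header cell to the innermost open table.
            self.tables[self._open[-1]] = True

    def handle_endtag(self, tag):
        if tag == "table" and self._open:
            self._open.pop()


def tables_without_headers(html):
    """Return the 0-based positions of tables that have no <th> cells."""
    scanner = TableHeaderScanner()
    scanner.feed(html)
    scanner.close()
    return [index for index, has_th in enumerate(scanner.tables) if not has_th]
```

A page would then appear in the report if `tables_without_headers()` returns a non-empty list for its HTML.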

For all the reports, we quality assured the outputs by manually checking that they included some of the 60 representative pages that had already been identified by hand as not complying with WCAG.

Speeding up the report generation

Once we developed and tested report generation, we realised we wanted to speed up reporting to allow more time to contribute to fixing any problems.

We used multi-processing techniques to do this. Each process took a chunk of pages and analysed them. If the process found a page to have accessibility problems for a particular report, the page details and accessibility fail reason were added to a queue. Another process read data from the queue and generated a .csv file listing all of the identified inaccessible pages. We repeated this for each accessibility report being run.
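
The pattern described above (worker processes feeding a queue, with one writer process producing the .csv file) can be sketched with Python’s standard `multiprocessing` and `csv` modules. This is a simplified illustration under our own assumptions, not the tool’s real code: `find_fail` is a crude stand-in check, and the function names, page keys and `None` sentinel are all hypothetical.

```python
import csv
import multiprocessing as mp

SENTINEL = None  # a worker puts this on the queue when its chunk is done


def find_fail(page):
    """Stand-in check: return a fail reason for the page, or None if it passes."""
    html = page["html"]
    if "<table" in html and "<th" not in html:
        return "table without header cells"
    return None


def split(pages, n):
    """Split pages into at most n roughly equal chunks."""
    size = max(1, -(-len(pages) // n))  # ceiling division
    return [pages[i:i + size] for i in range(0, len(pages), size)]


def analyse_chunk(chunk, queue):
    """Worker: analyse one chunk and queue a row for every failing page."""
    for page in chunk:
        reason = find_fail(page)
        if reason:
            queue.put((page["url"], reason))
    queue.put(SENTINEL)


def write_csv(queue, path, n_workers):
    """Writer: drain the queue into a CSV until every worker has finished."""
    finished = 0
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["url", "reason"])
        while finished < n_workers:
            row = queue.get()
            if row is SENTINEL:
                finished += 1
            else:
                writer.writerow(row)


def run_report(pages, path, n_workers=4):
    """Analyse pages in parallel and write the failing ones to path."""
    chunks = split(pages, n_workers)
    queue = mp.Queue()
    writer = mp.Process(target=write_csv, args=(queue, path, len(chunks)))
    writer.start()
    workers = [mp.Process(target=analyse_chunk, args=(c, queue)) for c in chunks]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    writer.join()
```

When run as a script, `run_report(pages, "report.csv")` should be called under an `if __name__ == "__main__":` guard, which `multiprocessing` needs on platforms that start new processes by spawning. Keeping a single writer process means only one process ever touches the output file.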

The tool and the multi-processing techniques saved us from doing a complicated and time-consuming manual process.

These reports were sorted by government department, so the Accessibility Team could speak with the relevant content editors about the accessibility issues.

How we used programming to help fix things

Once we’d found the issues, we wanted to keep helping the Accessibility Team fix them. We used programming to improve language detection, which saved the team time.

Mislabelled language pages

As mentioned above, it is a WCAG fail to have a page labelled as being written in one language when it is actually in a different language. This is because a screen reader trying to read English-labelled content would struggle to read Welsh words, for example.

We first tried to address this by using our code to send snippets of content to the Google Translate API, which we used to identify the language of content. This approach quickly became infeasible because of the large amount of content that needed its language identified and our limited time.

So, we changed tack. Instead, we used the langdetect Python package, as it identified language without making API calls. This significantly increased the speed with which we could identify the language; what would have taken 3 days now took 3 hours. Our colleagues could then spend more time resolving these language labelling issues.
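
Here is a minimal sketch of how a check like this might look with langdetect. The page keys (`url`, `locale`, `text`) and both function names are assumptions for the example. The detector is passed in as a parameter so the comparison logic can be tried out without the package installed; `load_langdetect()` needs `pip install langdetect`.

```python
from typing import Callable, Iterable, Iterator, Tuple


def load_langdetect() -> Callable[[str], str]:
    """Return langdetect's detect() function (third-party package)."""
    from langdetect import DetectorFactory, detect

    # langdetect can give different answers between runs unless it is seeded.
    DetectorFactory.seed = 0
    return detect


def mislabelled_pages(
    pages: Iterable[dict], detect: Callable[[str], str]
) -> Iterator[Tuple[str, str, str]]:
    """Yield (url, labelled language, detected language) where the two differ."""
    for page in pages:
        text = page["text"].strip()
        if not text:
            continue  # nothing to detect on an empty page
        detected = detect(text)
        if detected != page["locale"]:
            yield page["url"], page["locale"], detected
```

In use, this would be called as `mislabelled_pages(pages, load_langdetect())`. langdetect returns language codes such as `en` or `cy`, so the labels being compared need to use the same codes. Detection on very short snippets can be unreliable, so flagged pages are best treated as candidates for a manual check.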

Building on our work

We want to reuse and build on the work we’ve done to see where it can be used elsewhere. For example, the work on mislabelled language pages has been picked up by the GOV.UK Platform Health Team, who are looking to fix non-English pages that have English content.

We’ll continue to explore how this information about our content, or metadata, could potentially be stored and tracked so future WCAG fails could be proactively identified.

There are lots of ways to find out more about our work. You can subscribe to this blog to keep updated, contact the GOV.UK Data Labs Team or look at the GitHub repo.