The Performance Platform offers departments and agencies around 10 standard dashboard modules that display data on how their services are performing. Last week we visited the Ministry of Justice (MOJ) Prison Visits service to get feedback on how these modules are being used.

Our first product audit

The visit was the first product audit of the Performance Platform, a process we'll use to help prioritise the development of new platform features. Our user researcher and one of our developers attended the audit with me.

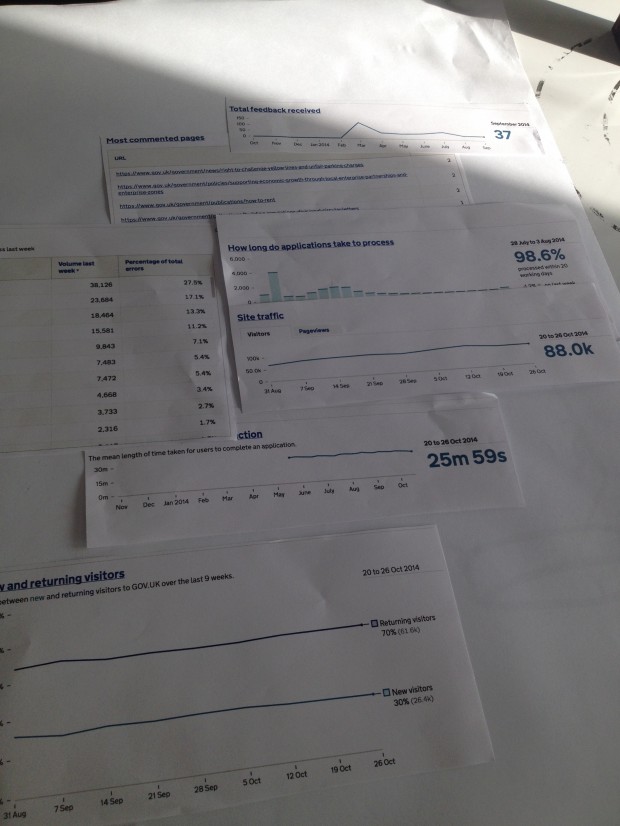

During the audit, we asked the Prison Visits service team to rate each module on two simple axes: ‘how often you use it’ and ‘importance’. Their ratings challenged a few of our assumptions about the modules.

The completion rate module

For example, we assumed the most popular module would be completion rate, because the completion rate of a service is the most important metric for service teams to get right. Users may be dissatisfied with a service, or may find it difficult to access on a mobile device, but if they can’t complete it at all, that’s a sure fail.

However, because Prison Visits is an established service, its completion rate is relatively consistent and only becomes interesting if it suddenly changes significantly. We can’t assume the most important module will always be the most popular.

The MOJ's most and least popular modules

Other ratings the service team gave to modules were less surprising to us. Their most popular modules were those that displayed data they lacked direct access to, like user satisfaction.

The modules rated least used and least popular were the uptime and response time module, total cost module, transactions per year module and live service use module. This was because the service team already had access to the data displayed in these modules, often at a more granular level.

Live service use was the least popular module, as the service manager had no concerns about lots of people using the service at the same time (the service has a limited number of users). If the service were a busier one, like the Vehicle Tax Renewals service, the module would probably have been a lot more popular.

Unimportant data

An interesting point the service team made was the lack of middle ground between the data they have found to be really important and the data they consider unimportant. This is a point we understand: not all data will be useful to every service manager, but it may still be useful for further exploration and analysis.

A service must be actively measured by a Performance Dashboard to pass the government Digital by Default Service Standard. Often these dashboards offer service managers actionable data they can use to improve their service. If some of the open data is not useful to them, it could still be useful to third parties carrying out government analysis.

More product audits to come

We won't be drawing final conclusions about which features to prioritise for development yet, but the MOJ audit has already fed useful insight into our development process. Our team is planning our next product audit with the team at the MOJ’s Lasting Power of Attorney service. We’ll blog about our findings after that audit.

If work like this sounds good to you, take a look at working for GDS. We’re usually searching for talented people to come and join the team.
