I’m Catherine Hope, a Digital Performance Analyst for the Department for Work and Pensions. My job is to help teams creating digital services to measure their success, and to improve services based on data-driven evidence.

The services we design are assessed against the Government Digital Service (GDS) standards. We bring our development team together (virtually, now) to show a panel of digital colleagues from other departments what we’ve done, so they can assess whether we’ve met the standards.

I sometimes see feedback from an assessment that a service needs to consider a “Save and Return” function — where the user could get part way through the process and leave without losing all of the answers they’ve already provided.

Sounds like a great idea! Some of our services ask lots (and I mean LOTS) of questions about a person's lifestyle, health, or income. They include asking for information you might need to find out, rather than know off the top of your head.

GDS has a pattern for Creating Accounts, so we wouldn’t have to start from scratch.

Let’s put Save and Return on everything‽

It will make our services faster and easier for our users. They won’t have to answer the same questions again if they need to go away and come back. It might also improve the success rate for us, with less risk of someone needing to telephone us for help with our digital service.

But Save and Return isn’t free. We need to do some work to develop it. And there are other risks to consider too. People would need to be able to return to the same point, which means we would need to store their answers. These answers might contain personal data like names, National Insurance numbers or bank account details, so we’d need to store them securely, and we’d need to be sure to only share answers again with the right person.

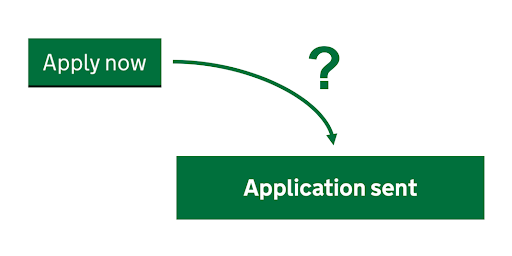

Users would need to be able to identify themselves to us, and giving us information to allow them to return will “cost” the user in terms of number of interactions. Signing in might put people off using the digital service, because it sounds too complicated. They might phone us instead, which could take longer for them, and for us.

In an ideal world, our services would be really easy to use, with just a few essential questions, and we wouldn’t need people to have to return to their answers because they wouldn’t leave before the end. But it’s not an ideal world — there could be many reasons that people leave a service.

How do we know whether there is a user need for a Save and Return function?

Not just a business want, but a user need.

As a data person I translate that question into “How could we measure whether we need a Save and Return function?”. This evidence can be considered alongside user research findings.

The Department for Work and Pensions (DWP) recently hosted a cross-government meeting of Digital Performance Analysts where I posed both of these questions. We had discussions in small groups about the benefits, the risks and the measurement.

Here are some things to measure:

- How many questions there are in the service, how complicated they are, and whether users need to give us information that they would have to go away to find.

- What the current Completion Rate is for the service, meaning the proportion of users who start the service and get all the way through. Does it feel low, compared to similar services?

- What the current Time to Complete the service is. Does it feel high, compared to similar services?

- How many sessions it takes a user to complete the process now.

- Whether users are looping through the pages of the process, which might indicate that they are confused by the questions.

- Who the users are. Their caring commitments, or their level of familiarity with technology, might mean that spending 20 minutes on a digital service is not possible for them.

- What the drop-out points are, and whether they link to the questions where people might need to go away and find something. This might help you iterate the service as well as understand whether there’s a need for Save and Return.

- Which pages users spend a long time on, or make lots of errors on, and what is causing those errors. This might indicate that we need to explain things better, especially if the pages with errors are also the pages people exit the service from.

- Whether we are getting lots of phone calls from users who tried to complete the process online but gave up.

- If there are questions where people need to go away to find out the answer, try iterating the content that tells them what they need. A/B test these iterations.
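As a rough illustration, a few of the measures above (Completion Rate, sessions per completed journey, and drop-out points) could be calculated from page-view data along these lines. This is a minimal Python sketch under some loud assumptions: the event format, the page names, the sample data and the `summarise` function are all made up for illustration, and a "drop-out point" is taken here to be the furthest page a non-completing user reached. Real analytics data will look different.

```python
from collections import Counter, defaultdict

# Hypothetical journey, in page order (names invented for illustration).
PAGES = ["start", "about-you", "income", "bank-details", "confirmation"]

# Hypothetical page-view events: (user_id, session_id, page).
EVENTS = [
    ("u1", "s1", "start"), ("u1", "s1", "about-you"), ("u1", "s1", "income"),
    ("u1", "s2", "start"), ("u1", "s2", "about-you"), ("u1", "s2", "income"),
    ("u1", "s2", "bank-details"), ("u1", "s2", "confirmation"),
    ("u2", "s3", "start"), ("u2", "s3", "about-you"), ("u2", "s3", "income"),
    ("u3", "s4", "start"), ("u3", "s4", "about-you"), ("u3", "s4", "income"),
    ("u3", "s4", "bank-details"), ("u3", "s4", "confirmation"),
    ("u4", "s5", "start"), ("u4", "s5", "about-you"),
]


def summarise(events, pages):
    """Return (completion_rate, avg_sessions_to_complete, drop_out_counts)."""
    first_page, last_page = pages[0], pages[-1]
    pages_seen = defaultdict(set)   # user -> pages of the journey they viewed
    sessions = defaultdict(set)     # user -> distinct sessions they had

    for user, session, page in events:
        if page not in pages:
            continue  # ignore pages outside the journey being measured
        pages_seen[user].add(page)
        sessions[user].add(session)

    starters = {u for u, seen in pages_seen.items() if first_page in seen}
    completers = {u for u in starters if last_page in pages_seen[u]}

    # Completion Rate: share of users who started and reached the last page.
    completion_rate = len(completers) / len(starters) if starters else 0.0

    # Average number of sessions used by people who did complete.
    avg_sessions = (
        sum(len(sessions[u]) for u in completers) / len(completers)
        if completers else 0.0
    )

    # Drop-out point (assumed definition): furthest page reached by each
    # user who started but did not complete.
    drop_outs = Counter(
        max(pages_seen[u], key=pages.index) for u in starters - completers
    )
    return completion_rate, avg_sessions, drop_outs


if __name__ == "__main__":
    rate, avg_sessions, drop_outs = summarise(EVENTS, PAGES)
    print(f"Completion rate: {rate:.0%}")
    print(f"Average sessions to complete: {avg_sessions}")
    print(f"Drop-out points: {dict(drop_outs)}")
```

If completing users often need more than one session, or drop-outs cluster on a page that asks for something people have to go and find, that is the kind of evidence that could sit alongside user research when weighing up Save and Return.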

There’s no easy answer to “Do we need a Save and Return function?”. But hopefully these prompts will help services to make a data-driven decision.

If you’ve worked on a service that implemented Save and Return, I’d love to know how you made this decision! Leave a comment please.