Aiming to be data-centric

Like most organisations today, the Ministry of Justice (MoJ) wants to use its data more effectively. The goal is to make sure that people making decisions have the insights they need at the right time to guide their decision making, whether that’s front-line prison staff or senior civil servants.

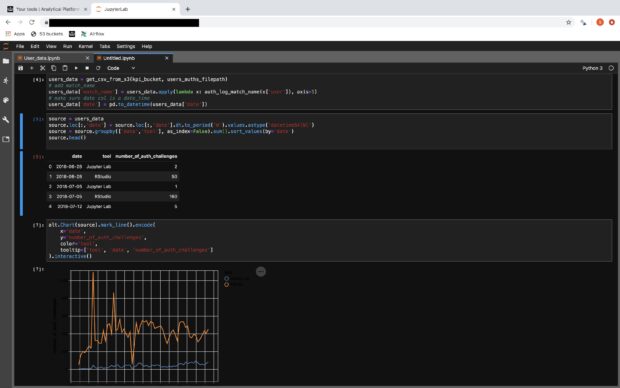

Using our new cloud-based Analytical Platform, we can develop open source tools to automatically manipulate and reshape data, run algorithms and analysis on it, and funnel the outputs to decision makers.

However, a common barrier to this process is the use of legacy systems and technologies.

Working with legacy systems

Within an organisation using legacy systems, the typical journey for a dataset starts with finding a colleague that has limited access to an outsourced database to extract a snapshot of the data. This snapshot is then transferred between analytical teams via email. At each step of the way it is adapted, cleaned, and corrected, often with manual ‘point-and-click’ processes.

The data is saved separately by each team in their own location on the corporate file system, meaning duplication of copies at the same time as a lack of version control. Often the data can only be obtained and cleaned at specific times or frequencies, like once every few months, which is hardly ideal for decision making.

When challenged, we often can’t say exactly when the data was extracted or fully describe what has been done to the data during processing. The manual steps mean that we can’t be completely sure there were no errors at each stage.

Overall, we end up with multiple copies of the data in different places, that are not consistent with each other and have a knock-on risk for data security, and we can’t be confident of obtaining the exact data used to inform previous decisions, should we need to revisit them.

If we can’t audit and reproduce the process of getting insights from data, then all the decisions are at risk of being made with unverified and possibly false information introduced by these legacy ways of working.

Fixing this problem is the opportunity of a new kind of data professional: the data engineer.

Data engineering

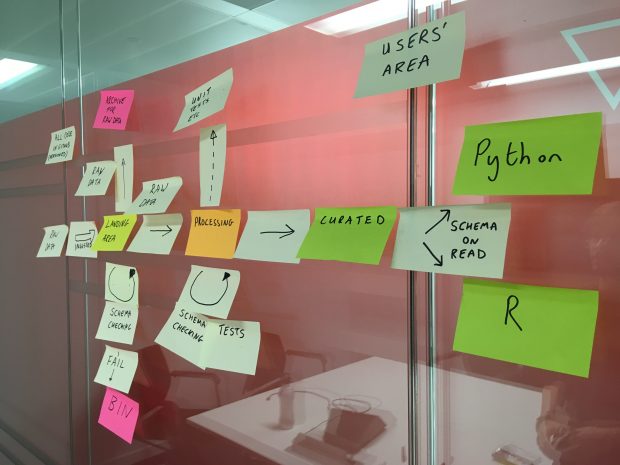

Our data engineers use modern tools and technologies to replace the legacy data journey described above with a single data pipeline.

They get the data in as close to its raw form as possible by setting up an automated feed directly from an operational database. They timestamp the data and transfer it to secure cloud storage, such as Amazon Web Services (AWS) S3 buckets.

They set up processes to clean and manipulate the data by writing version-controlled code (for example, using Python) that automatically provides us with an audit trail.

And ultimately, they provide our analysts and data scientists with a consistent copy of the final version of the data, accessible either in the cloud or offline depending on their requirements.

Creating this pipeline means that data can be processed automatically and be as up-to-date as decision-makers need. There is a single version of the truth for any given dataset and audit trails that take us back to the historical snapshots of the raw data. If we need to correct or update our data processing, we can easily do this. Even better, we can retrospectively apply these changes to historical data to recreate the archive anew.

Back to the future

This way of working unlocks an opportunity for our data engineers to incorporate machine learning processes or other analytical approaches into the pipeline and automate the results at scale.

So far, it has enabled us to automate the monthly forecasting of demand in our courts and provide a daily assessment of prison violence risk to frontline prison staff. Our data engineers are able to deal with data in novel forms like GPS coordinates or video, use fuzzy matching techniques to link up data across our services, and automatically detect and redact personal data where appropriate.

The opportunity is endless. Data is not going away. Public sector organisations have vast resources of data on the way that they interact with citizens. And they can use them to make better services.

The role of the data engineer is crucial for building better services for users!

If you are interested in data engineering you can register for the Government Data Engineering meet-up. Or even better, you can apply to be an entry level, senior or lead data engineer with the Ministry of Justice!