Community work: remote

Like all communities across government, performance analysts have been working remotely, and on 12 August we organised our first entirely virtual event. 70 colleagues joined from more than 25 organisations across government to share their work and reflect on a fast-paced 6 months.

This was our first meet-up since November 2019, and it was great to be back together as a community. The event was a good opportunity to welcome newcomers, celebrate our successes, discuss the challenges faced across the community and share best practice for overcoming them.

We kicked the day off with a community update and virtual coffee session. We then organised 4 other sessions: cookie consent, hypothesis-driven analysis, an update from HM Revenue & Customs and lightning talks.

Cookies and consent

Changes made in 2019 to the Information Commissioner's Office's guidance on obtaining consent for cookie storage have significantly altered how performance analysts approach the data we use to gather insights. In this session, we discussed how the community is addressing cookie consent and the different data challenges that are affecting the content and services on all government sites.

One particular challenge that we discussed was how best to explain the impact of these changes to people outside our community who use the data, and how we can add more context when presenting findings. With its wide-ranging effects across all parts of the community, this is definitely a topic for future cross-government collaboration.

Hypothesis-driven analysis

Hypothesis-driven analysis is being used at the Government Digital Service (GDS) within the Brexit Content Team. Managing such high-profile content, with multiple interests both inside and outside the team, was proving challenging. A performance analyst and a user researcher worked together to develop a testing framework to optimise content while making sure the team drew effectively on everyone's voices, skills and experience.

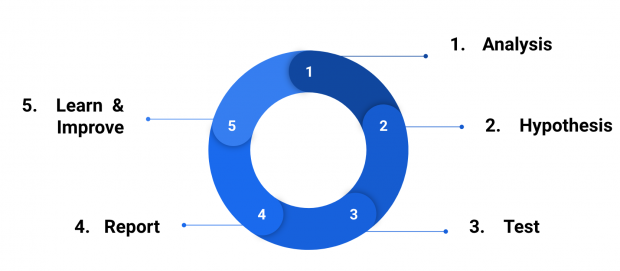

The testing framework is by no means revolutionary, but its structured cyclical process makes it extremely effective. The 5-step process enables teams to identify areas for improvement in a product, collectively hypothesise improvements, and test them.

Results can then be analysed, supported by additional user research when necessary. Findings can be shared widely afterwards and any product or service improvements may be implemented. This was also a great example of how user researchers and performance analysts can work effectively alongside one another.

Lightning talks

In this session we were lucky enough to have 2 short talks from outside the performance analysis community. The first, led jointly by a content designer and a performance analyst, was about the data training programme developed for content designers on GOV.UK. The programme aims to train designers to understand the different metrics that can be used to measure the performance of content and to identify potential improvements.

We were then joined by a user researcher, who talked us through the different ways that users navigate through content. In particular, they asked us to think about users who have different levels of expertise, both with the content they’re reading and with the technology they’re using to engage with it.

Users with high levels of digital confidence are often more comfortable with using search to navigate quickly in and out of the most relevant pages. Users who might be less comfortable, either with the technology or the content, tend to use a ‘hub and spoke’ model, where they use on-page navigation to move from central pages out to the most relevant content.

Where do we go from here?

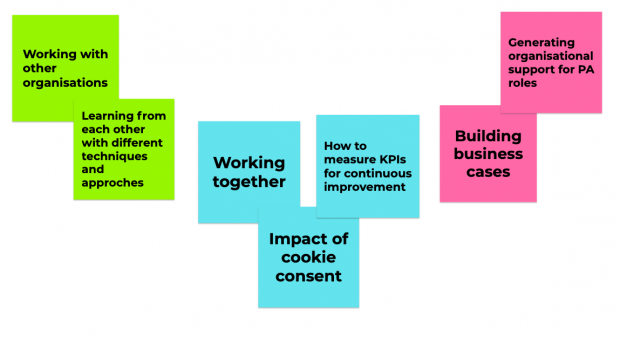

During the virtual coffee session we asked groups to discuss what they would like to see from the cross-government community over the next year. We identified 4 key themes within the feedback: cookie consent best practice, the role of a performance analyst, sight of what is going on in other departments, and how we join up with other communities of practice like user research and data science.

As coordinators we conducted a retrospective to assess what went well and what could have gone better. We agreed we had strong engagement from across the community, and the event itself ran smoothly with limited technical problems. To improve the event we agreed it would be beneficial to hear from more performance analysts across a greater number of departments; this could be encouraged by shorter presentation slots.

Future meet-ups

The next performance analysis community of practice meet-up will be held (virtually) in November and coordinated by our colleagues in HMRC.

If you are a performance analyst, a data analyst or from another related profession in the public sector, please join the community on Basecamp. Someone from your own department who is already on Basecamp can add you, or you can contact performance-analysts@digital.cabinet-office.gov.uk if your department has not yet joined the community.