Time flies. In 2014 I blogged under the title ‘Time for a rebrand: Product analysts become Performance analysts’. At the time, I wrote:

"In GDS, we focus on understanding user needs and measuring how well our content and services perform in meeting those needs. To make [our role] clearer, especially when we're looking for people to work with us, we have a ‘strapline’:

‘A performance analyst works with digital and other data sources to derive actionable information and insight for product owners.’"

Four years later, I thought it would be useful to review how far we’ve come and the challenges ahead.

People and capability

Back in 2014, there were about 7 performance analysts in the Government Digital Service (GDS) and an immature community elsewhere. Today, there are some 20 performance and data analysts in GDS and an active community in many departments and agencies across Great Britain from Edinburgh to Swansea and Plymouth.

In 2017, the role of performance analyst was formalised within the Digital, Data and Technology (DDaT) Profession Capability Framework.

To support the role and build capability, I organise 6 meetups each year for the cross-government performance analysis community (x-GPA). Each event attracts 50 to 60 attendees, who show and tell strategic challenges, best practice and reusable take-away tips.

By way of a taster, at the next meetup in Newcastle in December we’ll be focusing on the last of these, including:

- understanding how changes to GOV.UK content can impact your service

- what we learned about how to make data more accessible

- an unconference session for anyone to ask general or technical questions about Google Tag Manager

- working together for better outcomes - some 'good' and 'not so good' examples from internal assurance assessments

The community also provides training for service managers, new recruits to digital roles and DDaT Fast-Streamers.

But what do we actually do?

The DDaT role description starts off stating the obvious: ‘A performance analyst conducts analysis.’ And indeed we do. But it’s no good doing analysis in isolation and it’s not effective to spend a lot of time working in response to questions like ‘Tell me about this page?’

The analyst can beaver away second-guessing what the requestor wants to know and then find the analysis has no traction.

Our work has a number of elements, especially when working in and with agile development teams:

- Identify earlier analysis or landscape data that can go together with user research to inform user needs

- Help develop hypotheses that test whether a shipped feature is working and then identify what metrics to use to test them

- Identify the data sources you need and collect the data

- Do the analysis - making sure it’s as accurate and relevant as possible

- Tell actionable data stories - working with user researchers to provide richer insights about the performance of the feature or service

We bring this together as a ‘performance framework’, which we like to kick off early in a mission.
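
As a rough illustration of how one row of such a framework might be written down and tested, here is a minimal Python sketch. The `FrameworkEntry` fields, the example service and all the numbers are made up for illustration, and the two-proportion z-test is just one common way to compare completion rates before and after a change, not a description of how GDS teams actually do it.

```python
from dataclasses import dataclass
from math import erf, sqrt


@dataclass
class FrameworkEntry:
    """One row of a hypothetical performance framework."""
    user_need: str
    hypothesis: str
    metric: str
    data_source: str


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for the difference between two proportions."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    # Pooled proportion under the null hypothesis of no difference
    pooled = (success_a + success_b) / (total_a + total_b)
    se = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed with the error function
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - cdf)


# Illustrative entry: every value here is invented
entry = FrameworkEntry(
    user_need="Apply for a licence online",
    hypothesis="A simpler eligibility page increases completed applications",
    metric="completion rate (completed / started)",
    data_source="digital analytics",
)

# Made-up counts: completed and started applications before and after the change
z, p = two_proportion_z_test(400, 1000, 460, 1000)
print(f"{entry.metric}: z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the change in completion rate is unlikely to be down to chance alone, but it says nothing about why users behaved differently. That is where user research comes in.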

So the scope of our work and our skills has expanded. We increasingly work with wider data sets than digital analytics - for example, user feedback, call centre data and back-end data.

We increasingly work with more advanced tooling, including BigQuery, Python, R and data visualisation software. Several of us have been on the Data Accelerator programme. Softer skills of engagement, facilitation, influencing and presentation are also very important.

Standards and guidance

We’ve done a lot of work both informally and through the Service Manual and Service Assessments to change the culture around performance and data analysis. The points about data come towards the end of the current Digital Service Standard and tend to be discussed at the end of service assessments.

This seems to have given a subliminal message that these points are more about reporting. So we’ve rewritten a lot of guidance in the Manual to encourage teams to think about data much earlier in the product life-cycle and to be ready to talk about data at Discovery and Alpha stages.

Opportunities ahead

To better support Service Design, we need to be smarter about collecting data and measuring the performance of the whole service - for example, from applying for the right visa to leaving the country on time.

As our service design colleagues develop design patterns, we should look to design ‘analytics patterns’ - best practice ways of measuring how they’re working in a specific context.

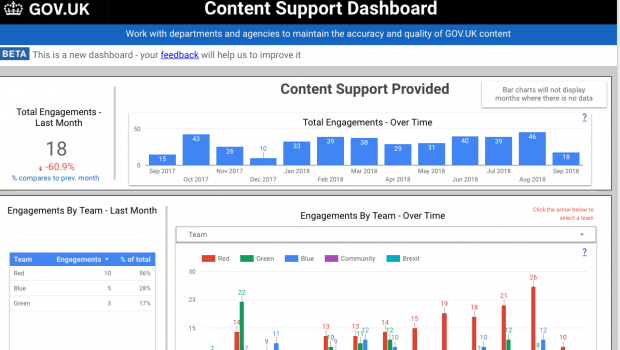

How do we democratise data? We need to make both data and our analysis more accessible to colleagues so they can self-serve and free up time for both them and us. At GDS we’ve been doing a lot of work in building dashboards and building data into tools. But it’s important to focus on training and explaining the context and caveats to data too.

We’ll continue to work closely with other colleagues to enhance overall insight. For example, in GOV.UK, performance analysts and data scientists have worked together to combine advanced data analysis skills with domain knowledge about digital analytics to identify bot behaviour.
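
This post doesn't go into how that bot work was done. Purely as an illustration of the kind of simple rule an analyst might start from, the Python sketch below flags sessions whose hit rate is implausibly high for a person. The function name, the thresholds and the sample data are all assumptions, and real bot detection would need far more domain knowledge than this.

```python
from collections import defaultdict


def flag_bot_like_sessions(hits, max_hits_per_minute=60.0, min_hits=20):
    """Return the ids of sessions with an unusually high hit rate.

    hits: iterable of (session_id, timestamp_in_seconds) pairs.
    The thresholds are arbitrary illustrative defaults.
    """
    stamps_by_session = defaultdict(list)
    for session_id, timestamp in hits:
        stamps_by_session[session_id].append(timestamp)

    flagged = set()
    for session_id, stamps in stamps_by_session.items():
        if len(stamps) < min_hits:
            continue  # too few hits to judge
        # Treat very short sessions as lasting at least one second
        minutes = max((max(stamps) - min(stamps)) / 60.0, 1 / 60.0)
        if len(stamps) / minutes > max_hits_per_minute:
            flagged.add(session_id)
    return flagged


# Invented sample: one hit every 30 seconds versus five hits a second
hits = [("human", t * 30) for t in range(25)]
hits += [("crawler", t * 0.2) for t in range(200)]
flagged = flag_bot_like_sessions(hits)
print(flagged)
```

A rule like this is only a starting point: it will miss slow crawlers and may catch legitimate automated users, which is why combining it with knowledge of how the analytics data is collected matters.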

And we’ll be working even more closely with our user research friends to combine quantitative data analysis (the ‘what’) with a deep understanding of user needs and drivers (the ‘why’) to enrich the insights that will enhance user-centred service design.

Peter Jordan is the Head of Performance and Data Analysis at GDS.