Yesterday the Office for Statistics Regulation published their review endorsing a reproducible approach to government statistics.

To better understand how programming and software engineering are currently used in government analysis and research, we ran the Coding in Analysis and Research Survey. Like any good dataset, it has left me with more questions than answers. Below I hope to clarify what reproducible analysis is and why we think it’s good.

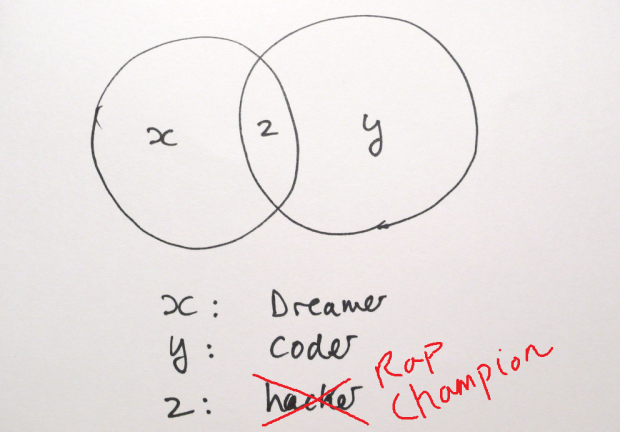

RAP is software engineering in analysis

Reproducible Analytical Pipelines, or RAP as it’s known in many of the communities that we support, is shorthand for applying some software engineering good practices to analysis and research.

We're borrowing tools from software engineering to make analytical code more robust, and we're encouraging people to ditch spreadsheets for programming languages, where that’s appropriate. Our aim is analysis that is easy to reproduce, quality assure, audit, adapt and sustain.

RAP has high return on investment

Many departments have begun the journey to develop some software engineering capability in their analytical directorates; all the departments that have done so report significant dividends from the investment.

This return on investment comes not only from the increased trustworthiness, quality and efficiency of the analytical products but also from the increased capability of the business area. Growing software engineering skills helps analysts move towards more data-science oriented skill sets, which bring further returns in the future as they are deployed.

RAP depends on analysis as code

Critical analysis should be moved away from spreadsheets, as complex spreadsheets often lead to avoidable and impactful errors. Spreadsheets aren’t necessarily the problem; it's just that they are put to uses that they weren’t intended for. Quality assuring them is an uphill struggle for their users. Ray Panko suggests that almost 90% of spreadsheets in the wild have errors.

Spreadsheets - with their point and click menus and dragging - are difficult to make reproducible. Code is easier to reproduce. There are still difficulties of course, but analysis as code is a better place to start.
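As a minimal sketch of what analysis as code can look like, the Python below replaces a dragged-down spreadsheet formula with a named, documented function. The figures and the function name `percentage_change` are made up for illustration and don't come from any real pipeline.

```python
def percentage_change(previous: float, current: float) -> float:
    """Return the percentage change from previous to current."""
    if previous == 0:
        raise ValueError("previous value must be non-zero")
    return (current - previous) / previous * 100


# Hypothetical yearly counts, standing in for a column in a spreadsheet.
yearly_counts = {2019: 1200, 2020: 1260, 2021: 1197}

# Compare each year with the one before it, rounding to one decimal place.
years = sorted(yearly_counts)
changes = {
    year: round(percentage_change(yearly_counts[prev], yearly_counts[year]), 1)
    for prev, year in zip(years, years[1:])
}
print(changes)
```

Anyone with the code and the data can rerun this and get the same numbers, and the calculation is written down once rather than hidden in a column of cells.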

We shy away from proprietary tools because we feel that users should be able to inspect our working regardless of whether they have access to expensive, shiny software. Python and R are fantastic languages with vast troves of existing open source packages, which show that we rarely need the closed source stuff.

RAP is more than writing clean code

To the person who wrote that RAP is a redefinition of documenting your code so that another person can read it, edit it, and not mess it up: that's certainly one of the principles. But programming best practice is more than clean code.

Many tools have been developed that make programming best practice easier, such as version control and continuous integration and deployment. We advocate these too, not just clean, well-structured code, although it's harder to do the former without the latter. To help analysts implement these, the Best Practice and Impact division have published guidance for Quality Assurance of Code for Analysis and Research, known as the Duck Book amongst government analysts.
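To give a flavour of the kind of automated check that continuous integration can run on every change, here is a small sketch in Python. The function `response_rate` and its tests are hypothetical examples rather than anything taken from the guidance. A test runner such as pytest would pick up the `test_` functions automatically; the final block lets you run them directly as well.

```python
def response_rate(responses: int, invited: int) -> float:
    """Return the response rate as a percentage."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    if not 0 <= responses <= invited:
        raise ValueError("responses must be between 0 and invited")
    return responses / invited * 100


def test_response_rate_typical_case():
    # 50 responses from 200 invitations is a quarter.
    assert response_rate(50, 200) == 25.0


def test_response_rate_rejects_impossible_input():
    # Zero invitations should be flagged, not silently divided by.
    try:
        response_rate(10, 0)
    except ValueError:
        return
    raise AssertionError("expected a ValueError for zero invitations")


if __name__ == "__main__":
    test_response_rate_typical_case()
    test_response_rate_rejects_impossible_input()
    print("all checks passed")
```

Checks like these are cheap to write and, once they sit alongside the code in version control, they run every time someone changes the pipeline.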

RAP is for the users

Users want analysis to be high-quality, trustworthy and valuable to them, and it is your duty to provide that. RAP helps you to do this by easing quality assurance procedures, opening your process to outside scrutiny, and delivering more for less.

It is the analytical products that fulfil the RAP principles, not the people making them. Any team can make a product that adheres to these principles, no matter who is in it.

RAP brings the analyst closer to the analysis

Analysts still need to interpret the data that they're reporting. This is the bit of analysis that really adds benefit for the users of the reports. Automating this wouldn’t be valuable.

We advocate writing our reports in R Markdown or Jupyter notebooks. This is not to say that we expect the reports to be the same year-on-year. Coding your report in R Markdown is not so much automation as a direct link between the calculations and the report. Without it, some poor analyst has to copy-paste the numbers between a spreadsheet and a document, and then ask another analyst to check that this has been done correctly.
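The idea of linking calculations directly to report text can be sketched in plain Python. This is not R Markdown or Jupyter syntax, only an illustration of the principle those tools build on, and the figures and wording are invented for the example.

```python
# Hypothetical survey figures; a real pipeline would read these from source data.
responses = {"uses_code": 412, "total": 1030}

# The number in the report is calculated, never typed in by hand.
share = responses["uses_code"] / responses["total"] * 100

report = (
    f"Of {responses['total']} respondents, "
    f"{share:.0f}% said they use code in their analysis."
)
print(report)
```

If the underlying data changes, rerunning the code updates the sentence, so there is no copy-paste step to check. The analyst still writes the interpretation around it.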

RAP is useful for ad hoc analysis too

Production analysis is clearly aided by introducing RAP principles and will benefit from the continuing efficiencies that good automation provides. However, ad hoc analysis can also benefit from lessons learned in software engineering - the main driver moves from efficiency to increased trustworthiness, better documentation and stronger guarantees of quality.

For ad hoc analysis, we think that minimising our manual steps and ensuring that our outputs are disposable, meaning they can be deleted and regenerated from the code at any time, are just plain good sense. Good use of version control will also record why you made each decision and when, so that future you, your team, or the poor soul that follows you can follow your line of thought.
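One way to picture disposable outputs is a script that throws away the output folder and rebuilds everything from the raw data each time it runs. The sketch below assumes a hypothetical `rebuild_outputs` function and made-up regional figures, and it uses a temporary directory so that it leaves nothing behind.

```python
import csv
import shutil
import tempfile
from pathlib import Path


def rebuild_outputs(raw_rows, output_dir: Path) -> Path:
    """Delete output_dir if present and regenerate every output from raw_rows."""
    if output_dir.exists():
        shutil.rmtree(output_dir)
    output_dir.mkdir(parents=True)

    # Sum the values for each region.
    totals = {}
    for region, value in raw_rows:
        totals[region] = totals.get(region, 0) + value

    # Write the summary in a fixed order so reruns are identical.
    summary_path = output_dir / "summary.csv"
    with summary_path.open("w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["region", "total"])
        for region in sorted(totals):
            writer.writerow([region, totals[region]])
    return summary_path


# Hypothetical raw data standing in for a source extract.
raw_rows = [("North", 10), ("South", 7), ("North", 5)]

with tempfile.TemporaryDirectory() as tmp:
    out = Path(tmp) / "outputs"
    first = rebuild_outputs(raw_rows, out).read_text()
    second = rebuild_outputs(raw_rows, out).read_text()
    print(first == second)
```

Because nothing in the output folder is edited by hand, losing it costs nothing: running the script again brings it back.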

There is a significant cost involved in developing the capability to get to RAP. We acknowledge it might be prohibitive for some ad hoc analysis. But the benefits of the transparency, the documentation, and the quality assurance outweigh that cost when the stakes are high. Teams that use the RAP principles by default are better prepared when crises occur.

RAP is best done one byte at a time

One of the concerns we hear most when beginning work with a team is the huge investment they think is needed to bring RAP into their work. We always respond that you can often build a RAP product by considering one set of steps at a time.

We advise people to start on the riskiest or most manual (read: boring) parts of the production process. Approaching RAP in this way is less scary and also shows its value much earlier. The team can use their new knowledge to better decide how to approach the rest of the pipeline.

A RAP isn't a RAP unless there's someone there to see it

Analysis managers can be worried that we will be creating a black box. But a black box means there isn't adequate documentation, or understanding of the changes, or capability to modify the product within the team – the opposite of our RAP principles.

The more you invest in developing these skills in your teams, the less likely you are to create a black box. But there is an initial leap into the unknown. I hope you agree that that leap, broken down into a series of small jumps, is worth it.

If you want to champion RAP in your department or learn more about the work we’re doing please contact The Good Practice Team.