Reproducible Analytical Pipelines

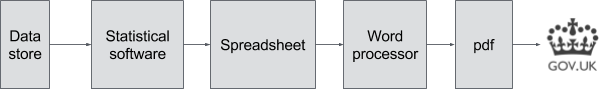

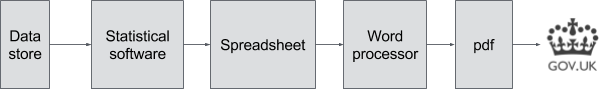

...as statistical publications are usually produced by several people, so this process is likely to be happening in parallel many times. A key element in this process is quality assurance...

...and decision-making more transparent. Why use open source code for data analysis? To summarise many other articles on the benefits of using open source code for data analysis: Analyses and...

...code. March - publish Renewals front-end code. May - publish a reworked new application form. Any appropriate new components that can be extracted will be open sourced. June - open...

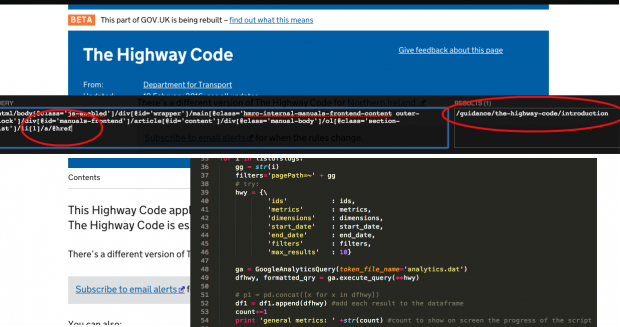

...of code working properly you can very quickly reuse the same code again, changing it to collect different metrics and dimensions. For example in the complete script I have quickly...

...services. The panel recommend that work should be undertaken to visualise a clear end-to-end user journey, including these inconsistent elements, to fully identify issues with the fact that these elements...

...to do this the first time you run it. The script needs to be saved and authorised. The code: you can paste the code straight into the script editor window....

...its new patient-facing service will start publishing code as that part of the service moves into beta. The panel were satisfied that there was sufficient potential to meet the requirement...

...I was new to Git, but it was very useful to use these new skills to share my code with my mentor. Make sure that your data is ready to...

...suites above building a new separate interface. The panel was impressed that the service have already got commitment from the 3 vendors of custody software to integrate with the new...

...of course, but analysis as code is a better place to start. We shy away from proprietary tools because we feel that users should be able to inspect our working...